Chapter 4

(auto-generated TOC)

Chapter 5

(auto-generated TOC)

Chapter 6

(auto-generated TOC)

Chapter 7

(auto-generated TOC)

Chapter 8

(auto-generated TOC)

Chapter 10

(auto-generated TOC)

Chapter 11

(auto-generated TOC)

Chapter 12

(auto-generated TOC)

Chapter 15

(auto-generated TOC)

Chapter 17

(auto-generated TOC)

Chapter 18

(auto-generated TOC)

Chapter 19

(auto-generated TOC)

Chapter 20

(auto-generated TOC)

Chapter 21

(auto-generated TOC)

Chapter 23

(auto-generated TOC)

A brief introduction

(automatically generated title slide)

A brief introduction

This was initially written by Jérôme Petazzoni to support in-person, instructor-led workshops and tutorials

Credit is also due to multiple contributors — thank you!

I recommend using the Slack Chat to help you ...

... And be comfortable spending some time reading the Kubernetes documentation ...

... And looking for answers on StackOverflow and other outlets

Hands on, you shall practice

Nobody ever became a Jedi by spending their lives reading Wookiepedia

Likewise, it will take more than merely reading these slides to make you an expert

These slides include tons of exercises and examples

They assume that you have access to a Kubernetes cluster

Pre-requirements

(automatically generated title slide)

Pre-requirements

Be comfortable with the UNIX command line

navigating directories

editing files

a little bit of bash-fu (environment variables, loops)

Some Docker knowledge

docker run,docker ps,docker buildideally, you know how to write a Dockerfile and build it

(even if it's aFROMline and a couple ofRUNcommands)

It's totally OK if you are not a Docker expert!

Tell me and I forget.

Teach me and I remember.

Involve me and I learn.

Misattributed to Benjamin Franklin

(Probably inspired by Chinese Confucian philosopher Xunzi)

Hands-on exercises

The whole workshop is hands-on, with "exercies"

You are invited to reproduce these exercises with me

All exercises are identified with a dashed box plus keyboard icon

This is the stuff you're supposed to do!

Go to https://slides.kubernetesmastery.com to view these slides

Join the chat room: Slack

What and why of orchestration

(automatically generated title slide)

What and why of orchestration

There are many computing orchestrators

They make decisions about when and where to "do work"

What and why of orchestration

There are many computing orchestrators

They make decisions about when and where to "do work"

We've done this since the dawn of computing: Mainframe schedulers, Puppet, Terraform, AWS, Mesos, Hadoop, etc.

What and why of orchestration

There are many computing orchestrators

They make decisions about when and where to "do work"

We've done this since the dawn of computing: Mainframe schedulers, Puppet, Terraform, AWS, Mesos, Hadoop, etc.

Since 2014 we've had a resurgence of new orchestration projects because:

What and why of orchestration

There are many computing orchestrators

They make decisions about when and where to "do work"

We've done this since the dawn of computing: Mainframe schedulers, Puppet, Terraform, AWS, Mesos, Hadoop, etc.

Since 2014 we've had a resurgence of new orchestration projects because:

- Popularity of distributed computing

What and why of orchestration

There are many computing orchestrators

They make decisions about when and where to "do work"

We've done this since the dawn of computing: Mainframe schedulers, Puppet, Terraform, AWS, Mesos, Hadoop, etc.

Since 2014 we've had a resurgence of new orchestration projects because:

Popularity of distributed computing

Docker containers as a app package and isolated runtime

What and why of orchestration

There are many computing orchestrators

They make decisions about when and where to "do work"

We've done this since the dawn of computing: Mainframe schedulers, Puppet, Terraform, AWS, Mesos, Hadoop, etc.

Since 2014 we've had a resurgence of new orchestration projects because:

Popularity of distributed computing

Docker containers as a app package and isolated runtime

We needed "many servers to act like one, and run many containers"

What and why of orchestration

There are many computing orchestrators

They make decisions about when and where to "do work"

We've done this since the dawn of computing: Mainframe schedulers, Puppet, Terraform, AWS, Mesos, Hadoop, etc.

Since 2014 we've had a resurgence of new orchestration projects because:

Popularity of distributed computing

Docker containers as a app package and isolated runtime

We needed "many servers to act like one, and run many containers"

And the Container Orchestrator was born

Container orchestrator

Many open source projects have been created in the last 5 years to:

- Schedule running of containers on servers

Container orchestrator

Many open source projects have been created in the last 5 years to:

Schedule running of containers on servers

Dispatch them across many nodes

Container orchestrator

Many open source projects have been created in the last 5 years to:

Schedule running of containers on servers

Dispatch them across many nodes

Monitor and react to container and server health

Container orchestrator

Many open source projects have been created in the last 5 years to:

Schedule running of containers on servers

Dispatch them across many nodes

Monitor and react to container and server health

Provide storage, networking, proxy, security, and logging features

Container orchestrator

Many open source projects have been created in the last 5 years to:

Schedule running of containers on servers

Dispatch them across many nodes

Monitor and react to container and server health

Provide storage, networking, proxy, security, and logging features

Do all this in a declarative way, rather than imperative

Container orchestrator

Many open source projects have been created in the last 5 years to:

Schedule running of containers on servers

Dispatch them across many nodes

Monitor and react to container and server health

Provide storage, networking, proxy, security, and logging features

Do all this in a declarative way, rather than imperative

Provide API's to allow extensibility and management

Major container orchestration projects

Kubernetes, aka K8s

Docker Swarm (and Swarm classic)

Apache Mesos/Marathon

Cloud Foundry

Amazon ECS (not OSS, AWS-only)

HashiCorp Nomad

Major container orchestration projects

Kubernetes, aka K8s

Docker Swarm (and Swarm classic)

Apache Mesos/Marathon

Cloud Foundry

Amazon ECS (not OSS, AWS-only)

HashiCorp Nomad

- Many of these tools run on top of Docker Engine

Major container orchestration projects

Kubernetes, aka K8s

Docker Swarm (and Swarm classic)

Apache Mesos/Marathon

Cloud Foundry

Amazon ECS (not OSS, AWS-only)

HashiCorp Nomad

Many of these tools run on top of Docker Engine

Kubernetes is the one orchestrator with many distributions

Kubernetes distributions

- Kubernetes "vanilla upstream" (not a distribution)

Kubernetes distributions

Kubernetes "vanilla upstream" (not a distribution)

Cloud-Managed distros: AKS, GKE, EKS, DOK...

Kubernetes distributions

Kubernetes "vanilla upstream" (not a distribution)

Cloud-Managed distros: AKS, GKE, EKS, DOK...

Self-Managed distros: RedHat OpenShift, Docker Enterprise, Rancher, Canonical Charmed, openSUSE Kubic...

Kubernetes distributions

Kubernetes "vanilla upstream" (not a distribution)

Cloud-Managed distros: AKS, GKE, EKS, DOK...

Self-Managed distros: RedHat OpenShift, Docker Enterprise, Rancher, Canonical Charmed, openSUSE Kubic...

Vanilla installers: kubeadm, kops, kubicorn...

Kubernetes distributions

Kubernetes "vanilla upstream" (not a distribution)

Cloud-Managed distros: AKS, GKE, EKS, DOK...

Self-Managed distros: RedHat OpenShift, Docker Enterprise, Rancher, Canonical Charmed, openSUSE Kubic...

Vanilla installers: kubeadm, kops, kubicorn...

Local dev/test: Docker Desktop, minikube, microK8s

Kubernetes distributions

Kubernetes "vanilla upstream" (not a distribution)

Cloud-Managed distros: AKS, GKE, EKS, DOK...

Self-Managed distros: RedHat OpenShift, Docker Enterprise, Rancher, Canonical Charmed, openSUSE Kubic...

Vanilla installers: kubeadm, kops, kubicorn...

Local dev/test: Docker Desktop, minikube, microK8s

CI testing: kind

Kubernetes distributions

Kubernetes "vanilla upstream" (not a distribution)

Cloud-Managed distros: AKS, GKE, EKS, DOK...

Self-Managed distros: RedHat OpenShift, Docker Enterprise, Rancher, Canonical Charmed, openSUSE Kubic...

Vanilla installers: kubeadm, kops, kubicorn...

Local dev/test: Docker Desktop, minikube, microK8s

CI testing: kind

Special builds: Rancher k3s

Kubernetes distributions

Kubernetes "vanilla upstream" (not a distribution)

Cloud-Managed distros: AKS, GKE, EKS, DOK...

Self-Managed distros: RedHat OpenShift, Docker Enterprise, Rancher, Canonical Charmed, openSUSE Kubic...

Vanilla installers: kubeadm, kops, kubicorn...

Local dev/test: Docker Desktop, minikube, microK8s

CI testing: kind

Special builds: Rancher k3s

And Many, many more... (86 as of June 2019)

Kubernetes concepts

(automatically generated title slide)

Kubernetes concepts

Kubernetes is a container management system

It runs and manages containerized applications on a cluster (one or more servers)

Often this is simply called "container orchestration"

Sometimes shortened to Kube or K8s ("Kay-eights" or "Kates")

Basic things we can ask Kubernetes to do

Basic things we can ask Kubernetes to do

- Start 5 containers using image

atseashop/api:v1.3

Basic things we can ask Kubernetes to do

Start 5 containers using image

atseashop/api:v1.3Place an internal load balancer in front of these containers

Basic things we can ask Kubernetes to do

Start 5 containers using image

atseashop/api:v1.3Place an internal load balancer in front of these containers

Start 10 containers using image

atseashop/webfront:v1.3

Basic things we can ask Kubernetes to do

Start 5 containers using image

atseashop/api:v1.3Place an internal load balancer in front of these containers

Start 10 containers using image

atseashop/webfront:v1.3Place a public load balancer in front of these containers

Basic things we can ask Kubernetes to do

Start 5 containers using image

atseashop/api:v1.3Place an internal load balancer in front of these containers

Start 10 containers using image

atseashop/webfront:v1.3Place a public load balancer in front of these containers

It's Black Friday (or Christmas), traffic spikes, grow our cluster and add containers

Basic things we can ask Kubernetes to do

Start 5 containers using image

atseashop/api:v1.3Place an internal load balancer in front of these containers

Start 10 containers using image

atseashop/webfront:v1.3Place a public load balancer in front of these containers

It's Black Friday (or Christmas), traffic spikes, grow our cluster and add containers

New release! Replace my containers with the new image

atseashop/webfront:v1.4

Basic things we can ask Kubernetes to do

Start 5 containers using image

atseashop/api:v1.3Place an internal load balancer in front of these containers

Start 10 containers using image

atseashop/webfront:v1.3Place a public load balancer in front of these containers

It's Black Friday (or Christmas), traffic spikes, grow our cluster and add containers

New release! Replace my containers with the new image

atseashop/webfront:v1.4Keep processing requests during the upgrade; update my containers one at a time

Other things that Kubernetes can do for us

Basic autoscaling

Blue/green deployment, canary deployment

Long running services, but also batch (one-off) and CRON-like jobs

Overcommit our cluster and evict low-priority jobs

Run services with stateful data (databases etc.)

Fine-grained access control defining what can be done by whom on which resources

Integrating third party services (service catalog)

Automating complex tasks (operators)

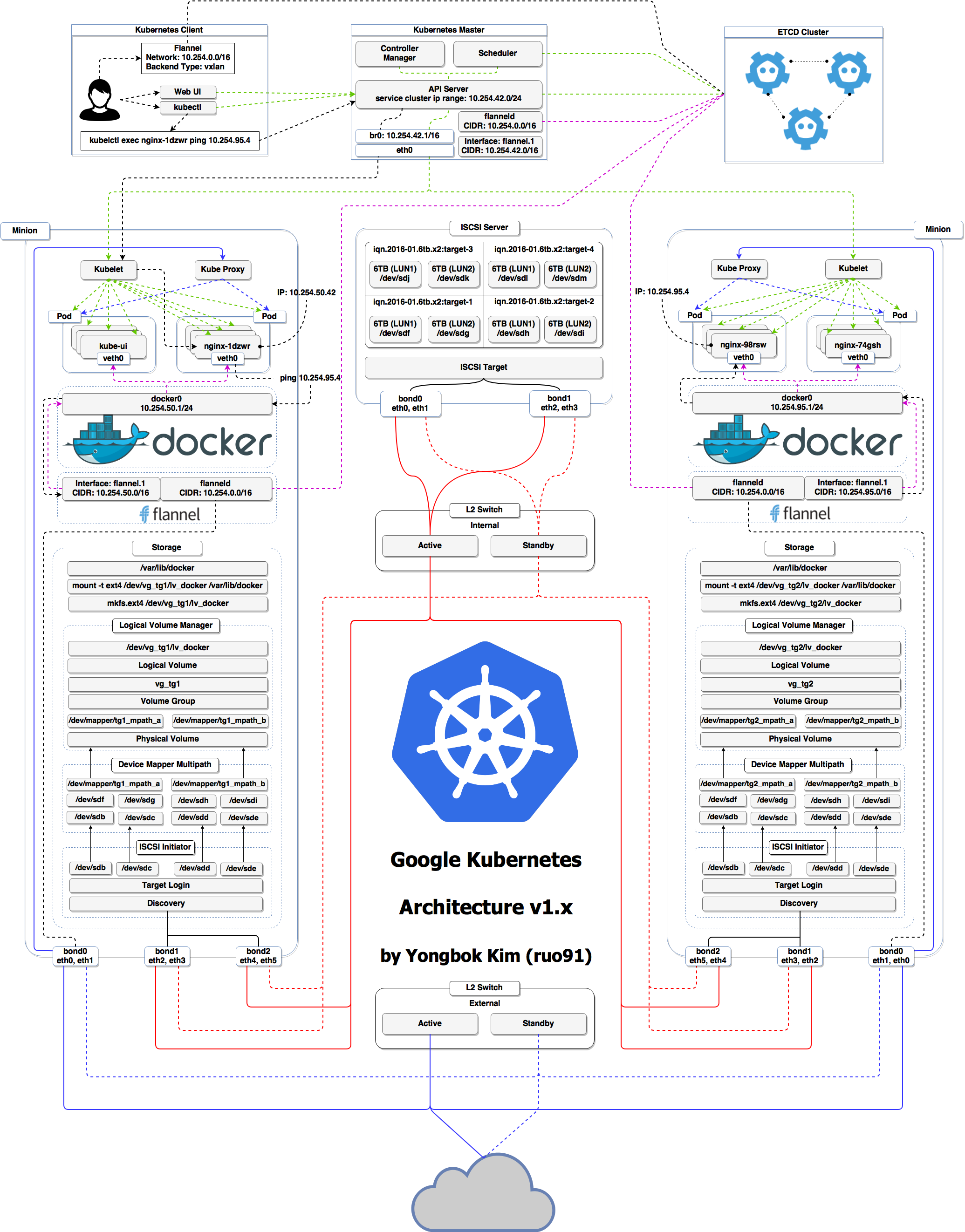

Kubernetes architecture

Ha ha ha ha

OK, I was trying to scare you, it's much simpler than that ❤️

Credits

The first schema is a Kubernetes cluster with storage backed by multi-path iSCSI

(Courtesy of Yongbok Kim)

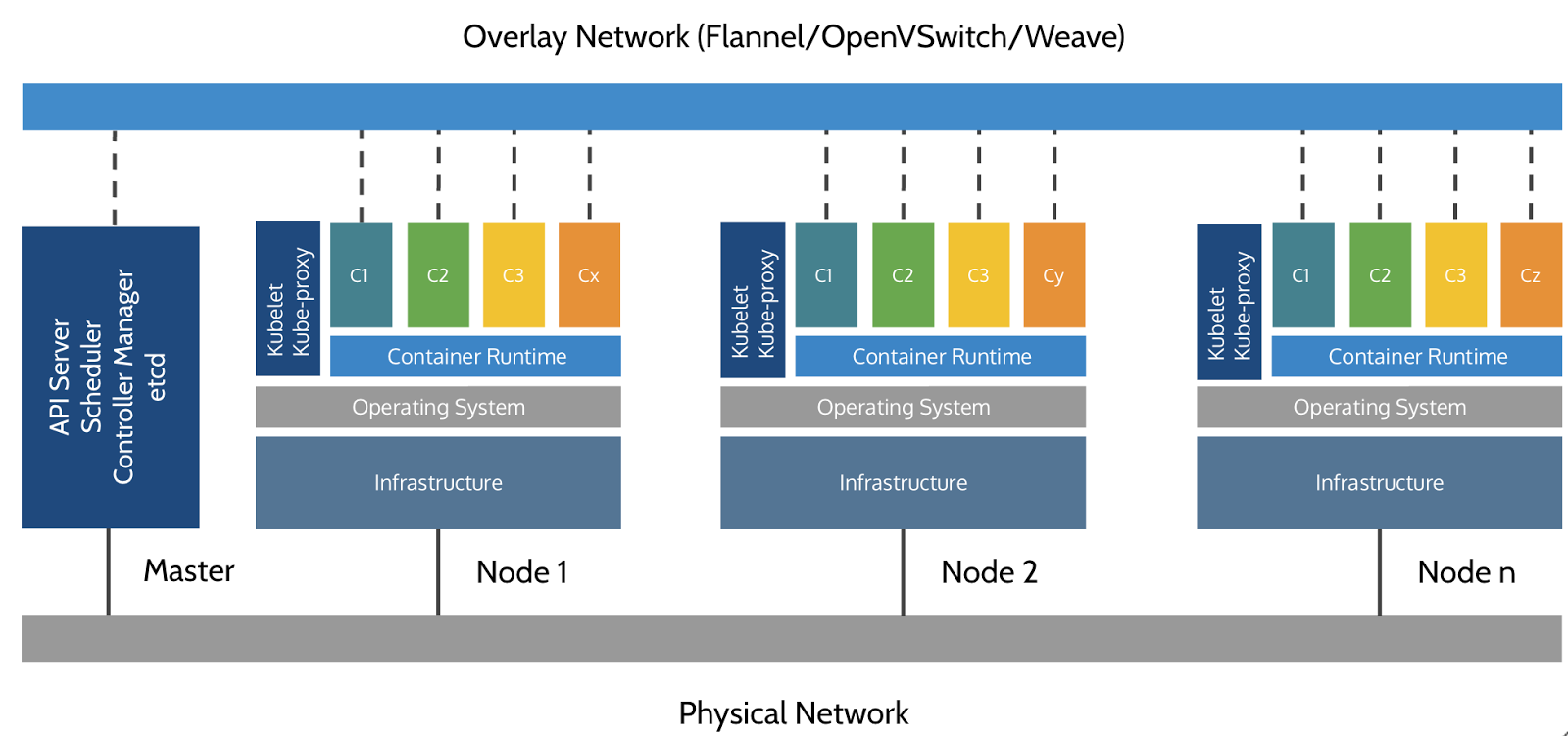

The second one is a simplified representation of a Kubernetes cluster

(Courtesy of Imesh Gunaratne)

Kubernetes architecture: the nodes

The nodes executing our containers run a collection of services:

a container Engine (typically Docker)

kubelet (the "node agent")

kube-proxy (a necessary but not sufficient network component)

Nodes were formerly called "minions"

(You might see that word in older articles or documentation)

Kubernetes architecture: the control plane

The Kubernetes logic (its "brains") is a collection of services:

the API server (our point of entry to everything!)

core services like the scheduler and controller manager

etcd(a highly available key/value store; the "database" of Kubernetes)

Together, these services form the control plane of our cluster

The control plane is also called the "master"

Running the control plane on special nodes

It is common to reserve a dedicated node for the control plane

(Except for single-node development clusters, like when using minikube)

This node is then called a "master"

(Yes, this is ambiguous: is the "master" a node, or the whole control plane?)

Normal applications are restricted from running on this node

(By using a mechanism called "taints")

When high availability is required, each service of the control plane must be resilient

The control plane is then replicated on multiple nodes

(This is sometimes called a "multi-master" setup)

Running the control plane outside containers

The services of the control plane can run in or out of containers

For instance: since

etcdis a critical service, some people deploy it directly on a dedicated cluster (without containers)(This is illustrated on the first "super complicated" schema)

In some hosted Kubernetes offerings (e.g. AKS, GKE, EKS), the control plane is invisible

(We only "see" a Kubernetes API endpoint)

In that case, there is no "master node"

For this reason, it is more accurate to say "control plane" rather than "master."

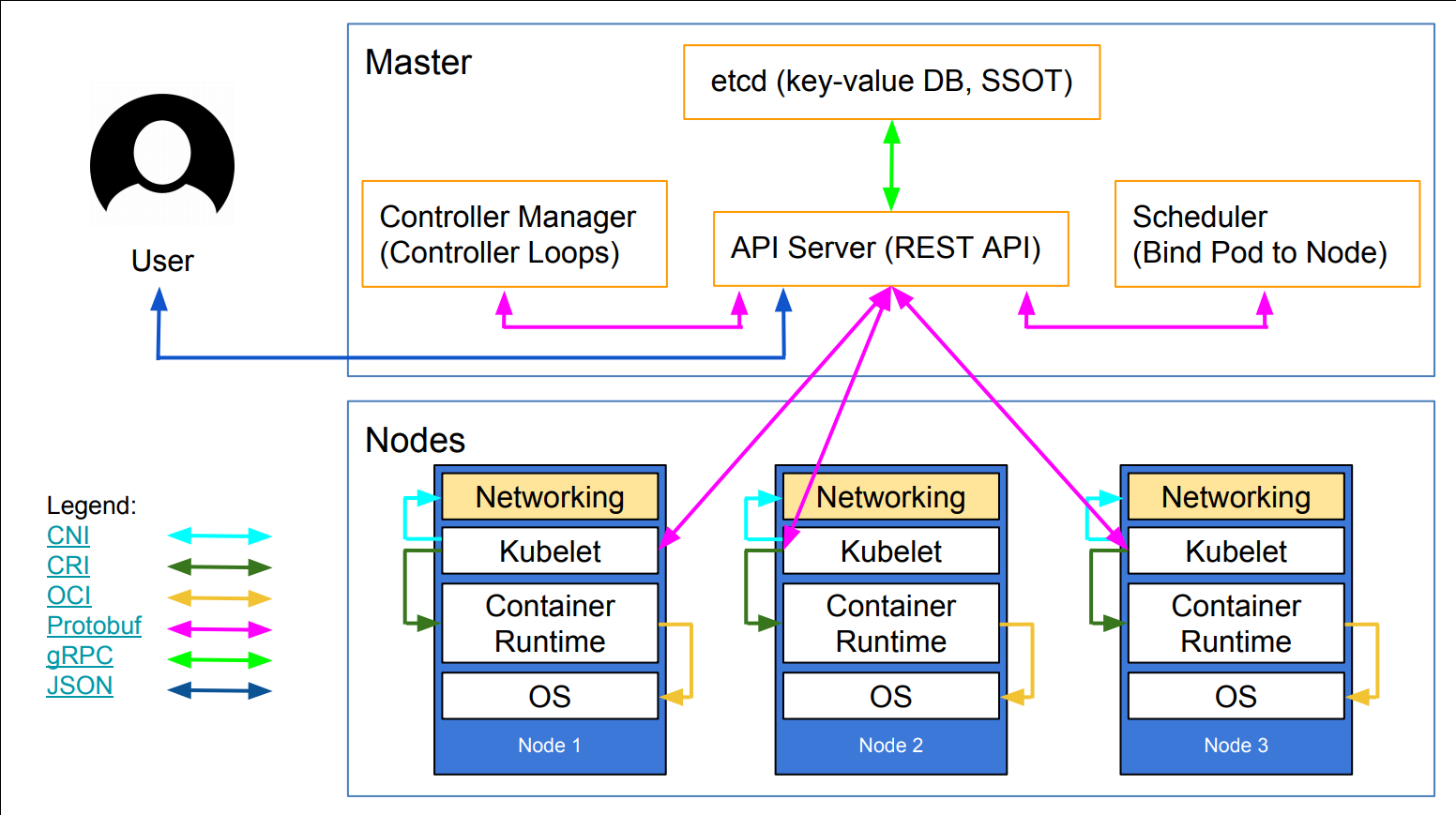

Do we need to run Docker at all?

No!

Do we need to run Docker at all?

No!

- By default, Kubernetes uses the Docker Engine to run containers

Do we need to run Docker at all?

No!

By default, Kubernetes uses the Docker Engine to run containers

Or leverage other pluggable runtimes through the Container Runtime Interface

Do we need to run Docker at all?

No!

By default, Kubernetes uses the Docker Engine to run containers

Or leverage other pluggable runtimes through the Container Runtime Interface

We could also use(deprecated)rkt("Rocket") from CoreOS

Do we need to run Docker at all?

No!

By default, Kubernetes uses the Docker Engine to run containers

Or leverage other pluggable runtimes through the Container Runtime Interface

We could also use(deprecated)rkt("Rocket") from CoreOScontainerd: maintained by Docker, IBM, and community

Used by Docker Engine, microK8s, k3s, GKE, and standalone; has

ctrCLI

Do we need to run Docker at all?

No!

By default, Kubernetes uses the Docker Engine to run containers

Or leverage other pluggable runtimes through the Container Runtime Interface

We could also use(deprecated)rkt("Rocket") from CoreOScontainerd: maintained by Docker, IBM, and community

Used by Docker Engine, microK8s, k3s, GKE, and standalone; has

ctrCLICRI-O: maintained by Red Hat, SUSE, and community; based on containerd

Used by OpenShift and Kubic, version matched to Kubernetes

Do we need to run Docker at all?

No!

By default, Kubernetes uses the Docker Engine to run containers

Or leverage other pluggable runtimes through the Container Runtime Interface

We could also use(deprecated)rkt("Rocket") from CoreOScontainerd: maintained by Docker, IBM, and community

Used by Docker Engine, microK8s, k3s, GKE, and standalone; has

ctrCLICRI-O: maintained by Red Hat, SUSE, and community; based on containerd

Used by OpenShift and Kubic, version matched to Kubernetes

Do we need to run Docker at all?

Yes!

Do we need to run Docker at all?

Yes!

In this course, we'll run our apps on a single node first

We may need to build images and ship them around

We can do these things without Docker

(and get diagnosed with NIH¹ syndrome)Docker is still the most stable container engine today

(but other options are maturing very quickly)

Do we need to run Docker at all?

On our development environments, CI pipelines ... :

Yes, almost certainly

On our production servers:

Yes (today)

Probably not (in the future)

More information about CRI on the Kubernetes blog

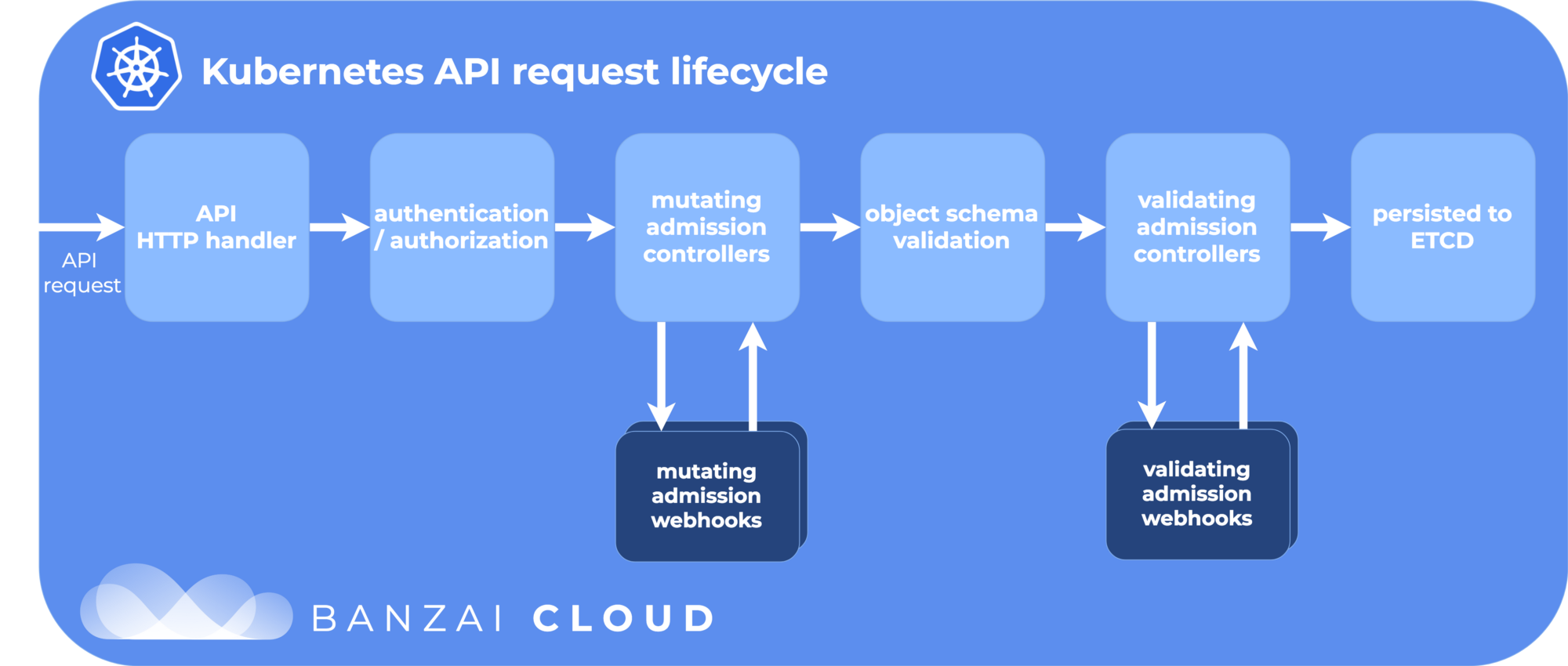

Interacting with Kubernetes

We will interact with our Kubernetes cluster through the Kubernetes API

The Kubernetes API is (mostly) RESTful

It allows us to create, read, update, delete resources

A few common resource types are:

node (a machine — physical or virtual — in our cluster)

pod (group of containers running together on a node)

service (stable network endpoint to connect to one or multiple containers)

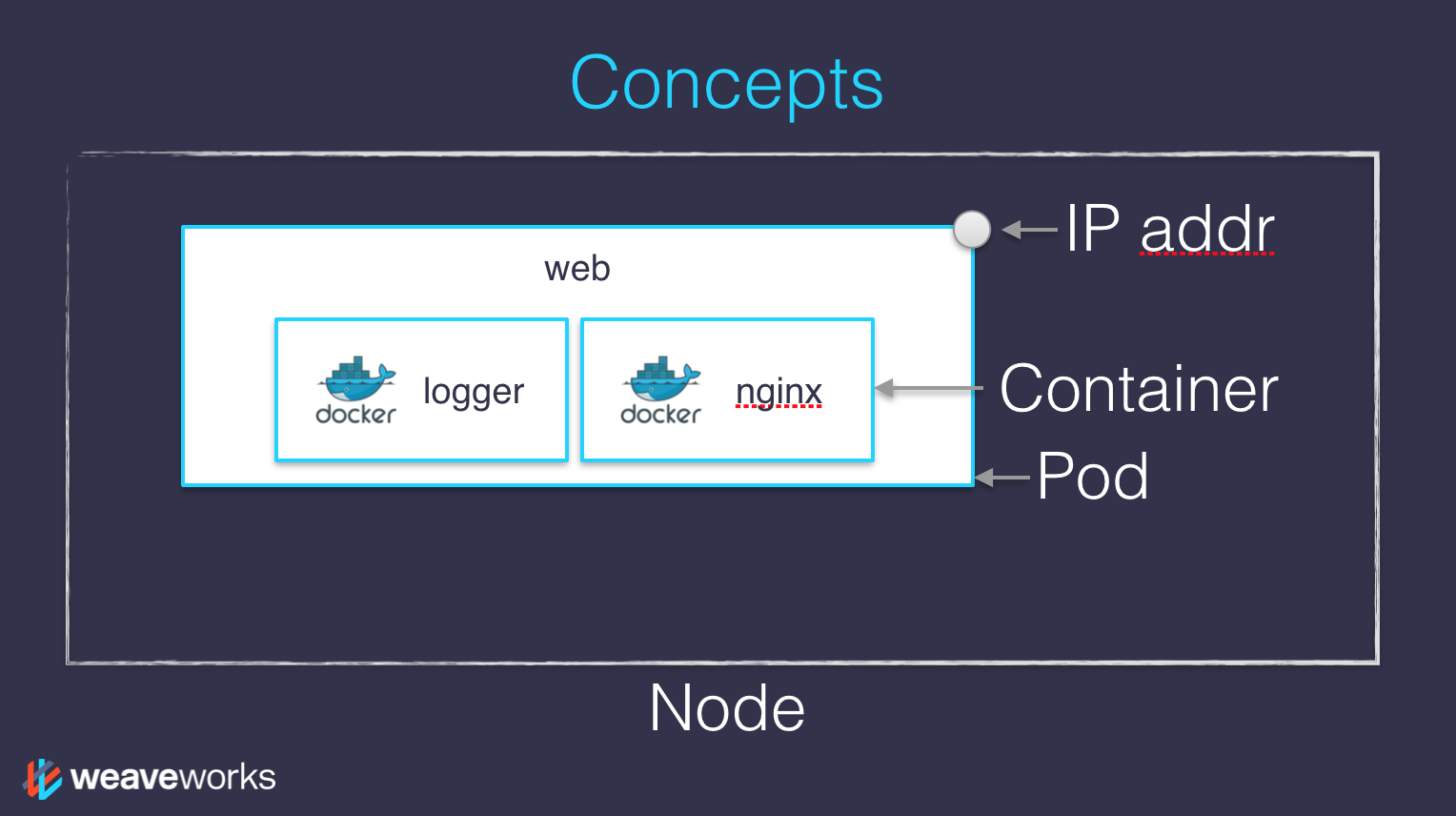

Pods

- Pods are a new abstraction!

Pods

Pods are a new abstraction!

A pod can have multiple containers working together

(But you usually only have on container per pod)

Pods

Pods are a new abstraction!

A pod can have multiple containers working together

(But you usually only have on container per pod)

Pod is our smallest deployable unit; Kubernetes can't mange containers directly

Pods

Pods are a new abstraction!

A pod can have multiple containers working together

(But you usually only have on container per pod)

Pod is our smallest deployable unit; Kubernetes can't mange containers directly

IP addresses are associated with pods, not with individual containers

Containers in a pod share

localhost, and can share volumes

Pods

Pods are a new abstraction!

A pod can have multiple containers working together

(But you usually only have on container per pod)

Pod is our smallest deployable unit; Kubernetes can't mange containers directly

IP addresses are associated with pods, not with individual containers

Containers in a pod share

localhost, and can share volumesMultiple containers in a pod are deployed together

In reality, Docker doesn't know a pod, only containers/namespaces/volumes k8smastery/concepts-k8s.md

Credits

The first diagram is courtesy of Lucas Käldström, in this presentation

- it's one of the best Kubernetes architecture diagrams available!

The second diagram is courtesy of Weaveworks

a pod can have multiple containers working together

IP addresses are associated with pods, not with individual containers

Both diagrams used with permission.

Getting a Kubernetes cluster for learning

(automatically generated title slide)

Getting a Kubernetes cluster for learning

Best: Get a environment locally

- Docker Desktop (Win/macOS/Linux), Rancher Desktop (Win/macOS/Linux), or microk8s (Linux)

- Small setup effort; free; flexible environments

- Requires 2GB+ of memory

Getting a Kubernetes cluster for learning

Best: Get a environment locally

- Docker Desktop (Win/macOS/Linux), Rancher Desktop (Win/macOS/Linux), or microk8s (Linux)

- Small setup effort; free; flexible environments

- Requires 2GB+ of memory

Good: Setup a cloud Linux host to run microk8s

- Great if you don't have the local resources to run Kubernetes

- Small setup effort; only free for a while

- My $50 DigitalOcean coupon lets you run Kubernetes free for a month

Getting a Kubernetes cluster for learning

Best: Get a environment locally

- Docker Desktop (Win/macOS/Linux), Rancher Desktop (Win/macOS/Linux), or microk8s (Linux)

- Small setup effort; free; flexible environments

- Requires 2GB+ of memory

Good: Setup a cloud Linux host to run microk8s

- Great if you don't have the local resources to run Kubernetes

- Small setup effort; only free for a while

- My $50 DigitalOcean coupon lets you run Kubernetes free for a month

Last choice: Use a browser-based solution

- Low setup effort; but host is short-lived and has limited resources

- Not all hands-on examples will work in the browser sandbox

Getting a Kubernetes cluster for learning

Best: Get a environment locally

- Docker Desktop (Win/macOS/Linux), Rancher Desktop (Win/macOS/Linux), or microk8s (Linux)

- Small setup effort; free; flexible environments

- Requires 2GB+ of memory

Good: Setup a cloud Linux host to run microk8s

- Great if you don't have the local resources to run Kubernetes

- Small setup effort; only free for a while

- My $50 DigitalOcean coupon lets you run Kubernetes free for a month

Last choice: Use a browser-based solution

- Low setup effort; but host is short-lived and has limited resources

- Not all hands-on examples will work in the browser sandbox

For all environments, we'll use

shpodcontainer for tools

Docker Desktop (Windows 10/macOS)

(automatically generated title slide)

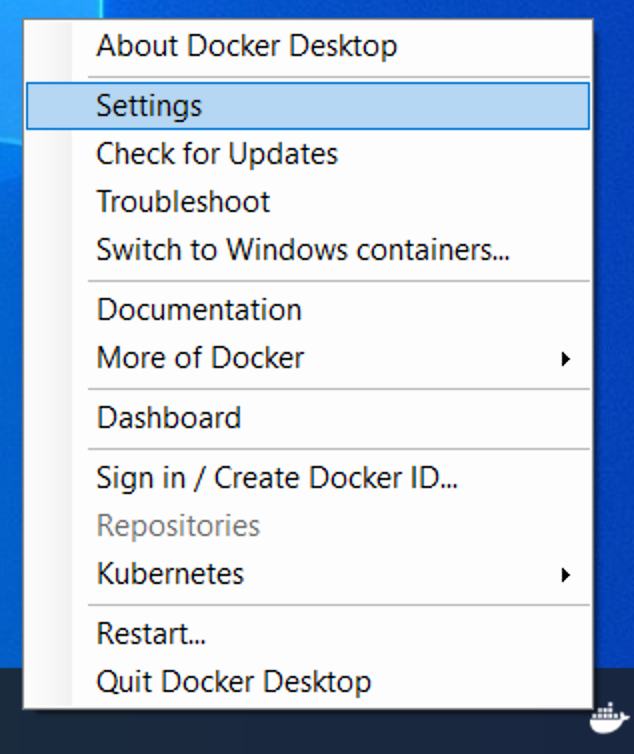

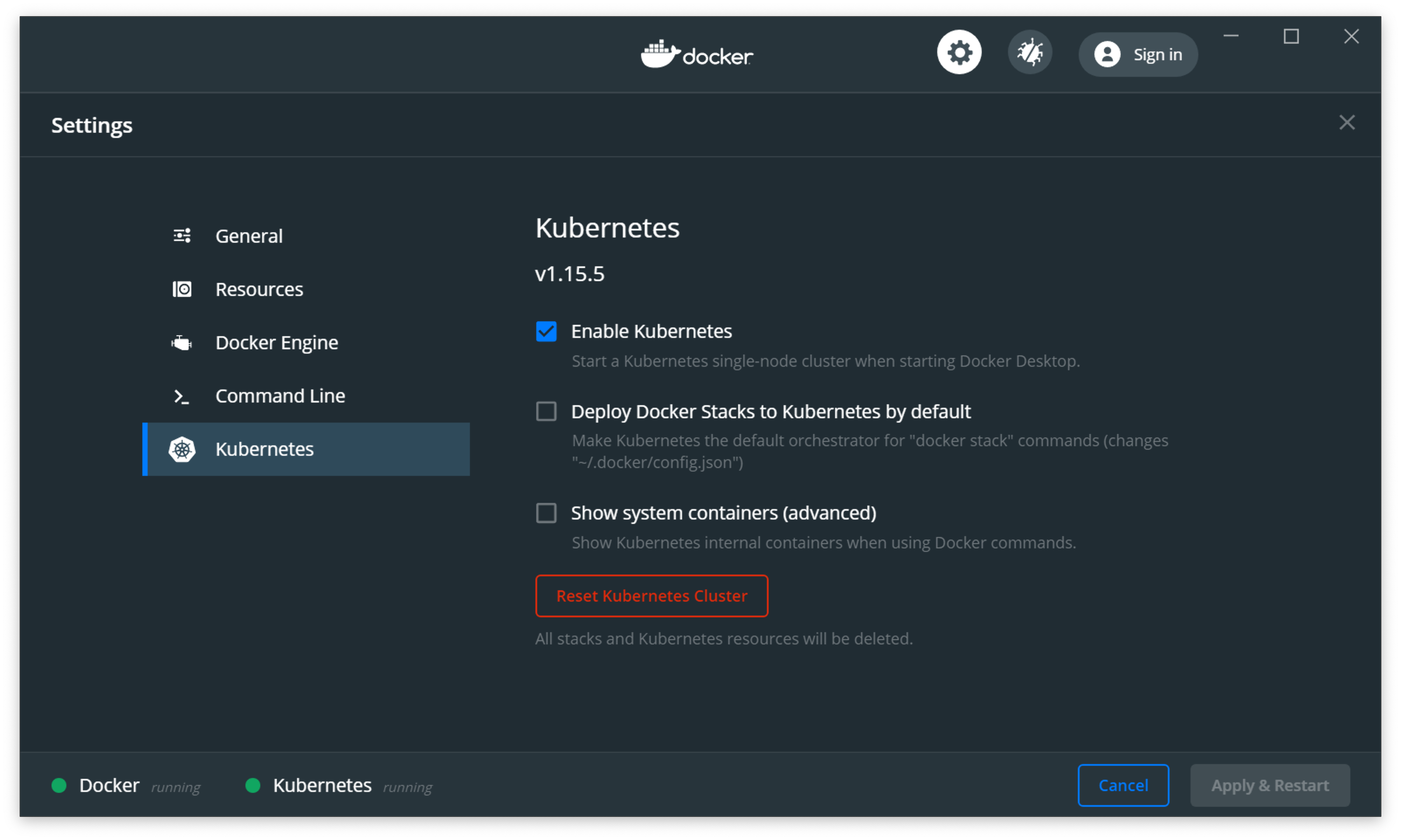

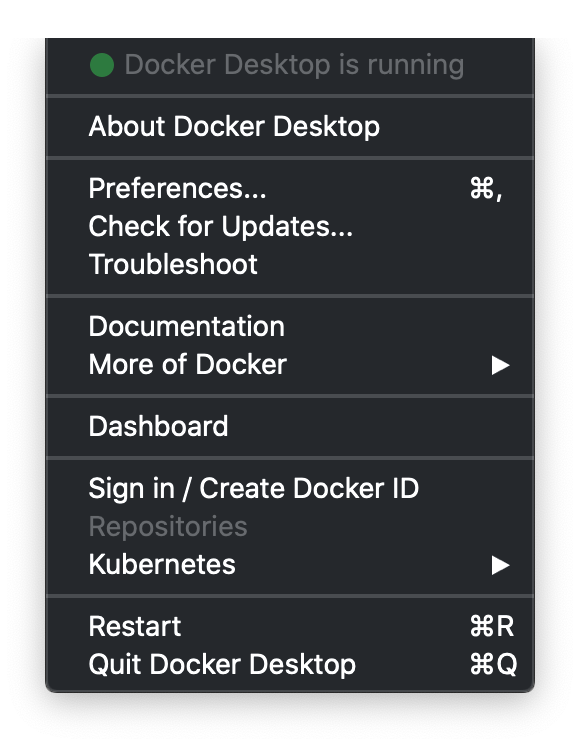

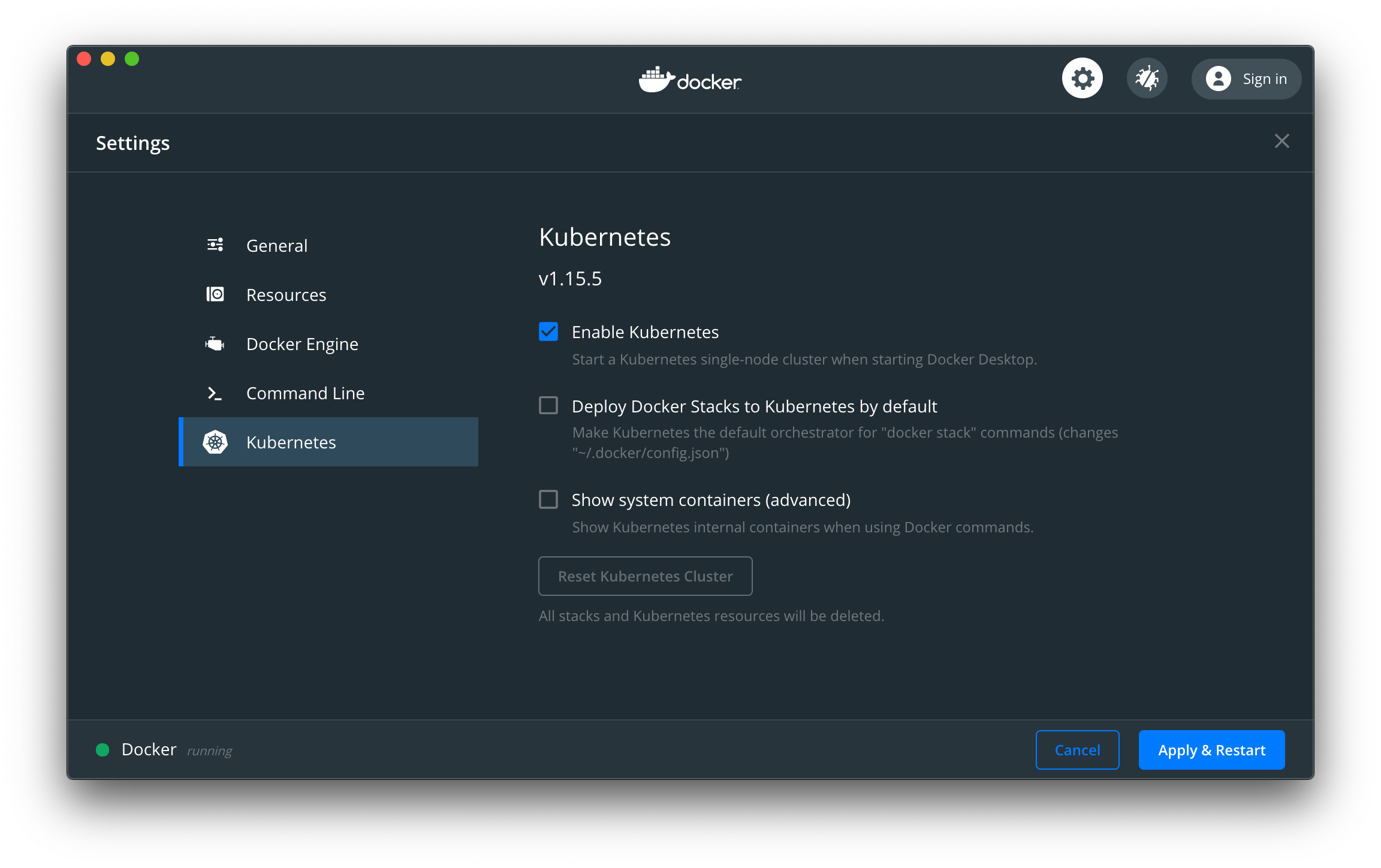

Docker Desktop (Windows 10/macOS)

- Docker Desktop (DD) is great for a local dev/test setup

Docker Desktop (Windows 10/macOS)

Docker Desktop (DD) is great for a local dev/test setup

Requires modern macOS or Windows 10 Pro/Ent/Edu (no Home)

- Requires Hyper-V, and disables VirtualBox

Docker Desktop (Windows 10/macOS)

Docker Desktop (DD) is great for a local dev/test setup

Requires modern macOS or Windows 10 Pro/Ent/Edu (no Home)

- Requires Hyper-V, and disables VirtualBox

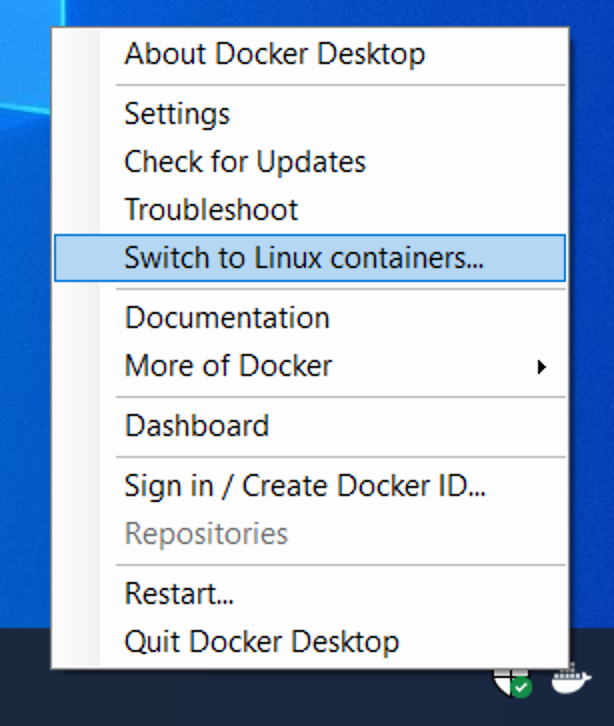

Download Windows or macOS versions and install

For Windows, ensure you pick "Linux Containers" mode

Once running, enabled Kubernetes in Settings/Preferences

minikube (Windows 10 Home)

(automatically generated title slide)

minikube (Windows 10 Home)

- A good local install option if you can't run Docker Desktop

minikube (Windows 10 Home)

A good local install option if you can't run Docker Desktop

Inspired by Docker Toolbox

- Will create a local VM and configure latest Kubernetes

- Has lots of other features with its

minikubeCLI

minikube (Windows 10 Home)

A good local install option if you can't run Docker Desktop

Inspired by Docker Toolbox

- Will create a local VM and configure latest Kubernetes

Has lots of other features with its

minikubeCLIBut, requires separate install of VirtualBox and kubectl

- May not work with older Windows versions (YMMV)

minikube (Windows 10 Home)

A good local install option if you can't run Docker Desktop

Inspired by Docker Toolbox

- Will create a local VM and configure latest Kubernetes

Has lots of other features with its

minikubeCLIBut, requires separate install of VirtualBox and kubectl

- May not work with older Windows versions (YMMV)

- Download and install VirtualBox

- Download kubectl, and add to $PATH

- Download and install minikube

- Run

minikube startto create and run a Kubernetes VM - Run

minikube stopwhen you're done

minikube (Windows 10 Home)

A good local install option if you can't run Docker Desktop

Inspired by Docker Toolbox

- Will create a local VM and configure latest Kubernetes

Has lots of other features with its

minikubeCLIBut, requires separate install of VirtualBox and kubectl

- May not work with older Windows versions (YMMV)

- Download and install VirtualBox

- Download kubectl, and add to $PATH

- Download and install minikube

- Run

minikube startto create and run a Kubernetes VM - Run

minikube stopwhen you're done

If you get an error about "This computer doesn't have VT-X/AMD-v enabled", you need to enable virtualization in your computer BIOS.

k8smastery/install-minikube.md

MicroK8s (Linux)

(automatically generated title slide)

MicroK8s (Linux)

Easy install and management of local Kubernetes

Made by Canonical (Ubuntu). Installs using

snap. Works nearly everywhere- Has lots of other features with its

microk8sCLI

MicroK8s (Linux)

Easy install and management of local Kubernetes

Made by Canonical (Ubuntu). Installs using

snap. Works nearly everywhereHas lots of other features with its

microk8sCLIBut, requires you install

snapif not on Ubuntu- Runs on containerd rather than Docker, no biggie

- Needs alias setup for

microk8s kubectl

MicroK8s (Linux)

Easy install and management of local Kubernetes

Made by Canonical (Ubuntu). Installs using

snap. Works nearly everywhereHas lots of other features with its

microk8sCLIBut, requires you install

snapif not on Ubuntu- Runs on containerd rather than Docker, no biggie

- Needs alias setup for

microk8s kubectl

- Install

microk8s,change group permissions, then set alias in bashrcsudo snap install microk8s --classicsudo usermod -a -G microk8s <username>echo "alias kubectl='microk8s kubectl'" >> ~/.bashrc# log out and back in if using a non-root user

k8smastery/install-microk8s.md

MicroK8s Additional Info

- We'll need these later (these are done for us in Docker Desktop and minikube):

Create kubectl config file

microk8s kubectl config view --raw > $HOME/.kube/configInstall CoreDNS in Kubernetes

sudo microk8s enable dns

- You can also install other plugins this way like

microk8s enable dashboardormicrok8s enable ingress

k8smastery/install-microk8s.md

MicroK8s Troubleshooting

- Run a check for any config problems

- Test MicroK8s config for any potental problemssudo microk8s inspect

If you also have Docker installed, you can ignore warnings about iptables and registries

See troubleshooting site if you have issues

k8smastery/install-microk8s.md

Web-based options

(automatically generated title slide)

Web-based options

Last choice: Use a browser-based solution

Web-based options

Last choice: Use a browser-based solution

- Low setup effort; but host is short-lived and has limited resources

Web-based options

Last choice: Use a browser-based solution

Low setup effort; but host is short-lived and has limited resources

Services are not always working right, and may not be up to date

Web-based options

Last choice: Use a browser-based solution

Low setup effort; but host is short-lived and has limited resources

Services are not always working right, and may not be up to date

Not all hands-on examples will work in the browser sandbox

- Use a prebuilt Kubernetes server at Katacoda

- Or setup a Kubernetes node at play-with-k8s.com

- Maybe try the latest OpenShift at learn.openshift.com

- See if instruqt works for a Kubernetes playground

shpod: For a consistent Kubernetes experience ...

(automatically generated title slide)

shpod: For a consistent Kubernetes experience ...

You can use shpod for examples

shpodprovides a shell running in a pod on the clusterIt comes with many tools pre-installed (helm, stern, curl, jq...)

These tools are used in many exercises in these slides

shpodalso gives you shell completion and a fancy promptCreate it with

kubectl apply -f https://k8smastery.com/shpod.yamlAttach to shell with

kubectl attach --namespace=shpod -ti shpodAfter finishing course

kubectl delete -f https://k8smastery.com/shpod.yaml

First contact with kubectl

(automatically generated title slide)

First contact with kubectl

kubectlis (almost) the only tool we'll need to talk to KubernetesIt is a rich CLI tool around the Kubernetes API

(Everything you can do with

kubectl, you can do directly with the API)

First contact with kubectl

kubectlis (almost) the only tool we'll need to talk to KubernetesIt is a rich CLI tool around the Kubernetes API

(Everything you can do with

kubectl, you can do directly with the API)On our machines, there is a

~/.kube/configfile with:the Kubernetes API address

the path to our TLS certificates used to authenticate

You can also use the

--kubeconfigflag to pass a config fileOr directly

--server,--user, etc.

First contact with kubectl

kubectlis (almost) the only tool we'll need to talk to KubernetesIt is a rich CLI tool around the Kubernetes API

(Everything you can do with

kubectl, you can do directly with the API)On our machines, there is a

~/.kube/configfile with:the Kubernetes API address

the path to our TLS certificates used to authenticate

You can also use the

--kubeconfigflag to pass a config fileOr directly

--server,--user, etc.kubectlcan be pronounced "Cube C T L", "Cube cuttle", "Cube cuddle"...I'll be using the official name "Cube Control" 😎

kubectl is the new SSH

We often start managing servers with SSH

(installing packages, troubleshooting ...)

At scale, it becomes tedious, repetitive, error-prone

Instead, we use config management, central logging, etc.

In many cases, we still need SSH:

as the underlying access method (e.g. Ansible)

to debug tricky scenarios

to inspect and poke at things

The parallel with kubectl

We often start managing Kubernetes clusters with

kubectl(deploying applications, troubleshooting ...)

At scale (with many applications or clusters), it becomes tedious, repetitive, error-prone

Instead, we use automated pipelines, observability tooling, etc.

In many cases, we still need

kubectl:to debug tricky scenarios

to inspect and poke at things

The Kubernetes API is always the underlying access method

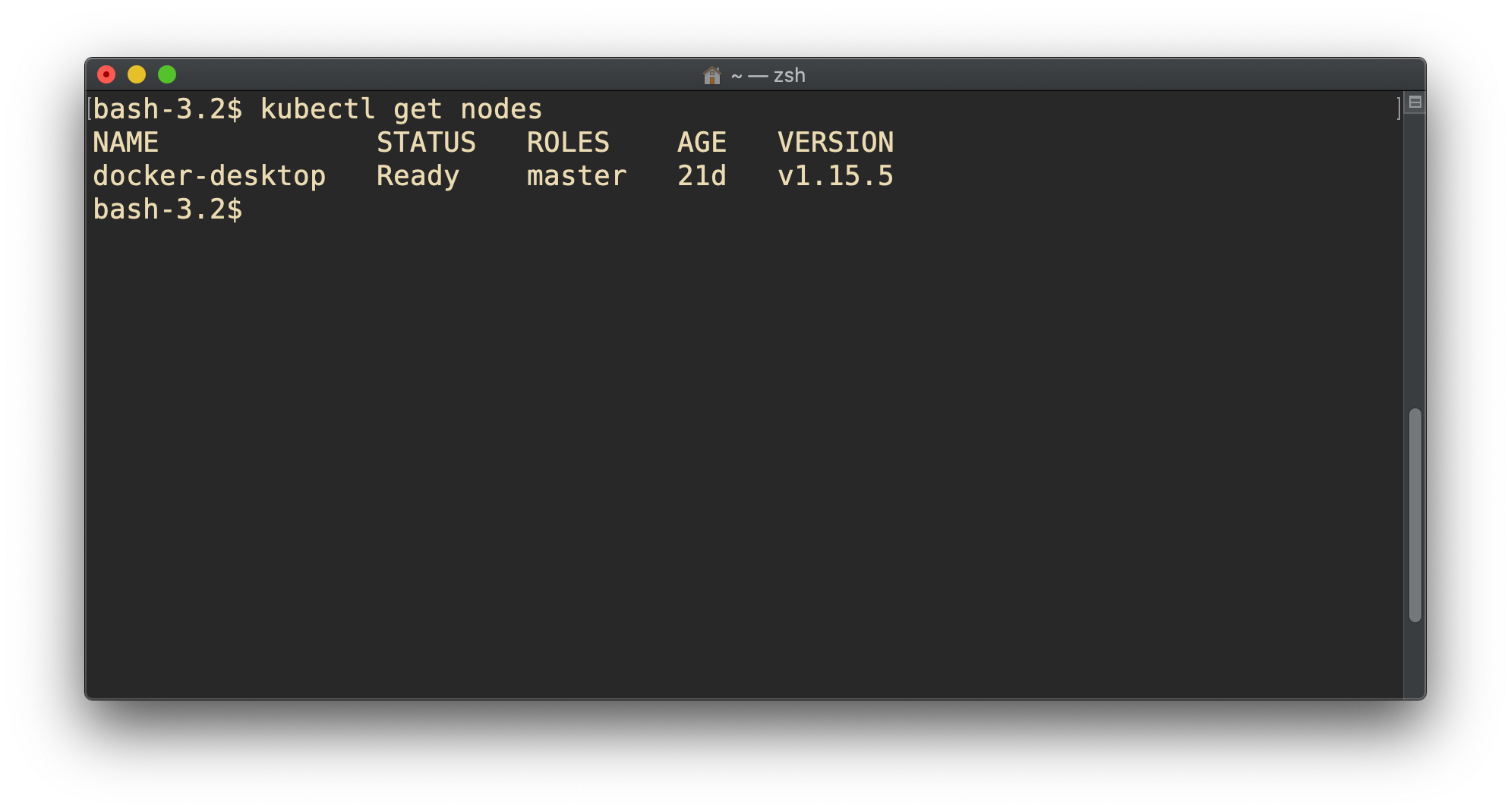

kubectl get

- Let's look at our

Noderesources withkubectl get!

Look at the composition of our cluster:

kubectl get nodeThese commands are equivalent:

kubectl get nokubectl get nodekubectl get nodes

Obtaining machine-readable output

kubectl getcan output JSON, YAML, or be directly formatted

Give us more info about the nodes:

kubectl get nodes -o wideLet's have some YAML:

kubectl get no -o yamlSee that

kind: Listat the end? It's the type of our result!

(Ab)using kubectl and jq

- It's super easy to build custom reports

- Show the capacity of all our nodes as a stream of JSON objects:kubectl get nodes -o json |jq ".items[] | {name:.metadata.name} + .status.capacity"

Viewing details

We can use

kubectl get -o yamlto see all available detailsHowever, YAML output is often simultaneously too much and not enough

For instance,

kubectl get node node1 -o yamlis:too much information (e.g.: list of images available on this node)

not enough information (e.g.: doesn't show pods running on this node)

difficult to read for a human operator

For a comprehensive overview, we can use

kubectl describeinstead

kubectl describe

kubectl describeneeds a resource type and (optionally) a resource nameIt is possible to provide a resource name prefix

(all matching objects will be displayed)

kubectl describewill retrieve some extra information about the resource

- Look at the information available for your node name with one of the following:kubectl describe node/<node>kubectl describe node <node>

(We should notice a bunch of control plane pods.)

Exploring types and definitions

We can list all available resource types by running

kubectl api-resources

(In Kubernetes 1.10 and prior, this command used to bekubectl get)We can view the definition for a resource type with:

kubectl explain typeWe can view the definition of a field in a resource, for instance:

kubectl explain node.specOr get the list of all fields and sub-fields:

kubectl explain node --recursive

Introspection vs. documentation

We can access the same information by reading the API documentation

The API documentation is usually easier to read, but:

it won't show custom types (like Custom Resource Definitions)

we need to make sure that we look at the correct version

kubectl api-resourcesandkubectl explainperform introspection(they communicate with the API server and obtain the exact type definitions)

Type names

The most common resource names have three forms:

singular (e.g.

node,service,deployment)plural (e.g.

nodes,services,deployments)short (e.g.

no,svc,deploy)

Some resources do not have a short name

Endpointsonly have a plural form(because even a single

Endpointsresource is actually a list of endpoints)

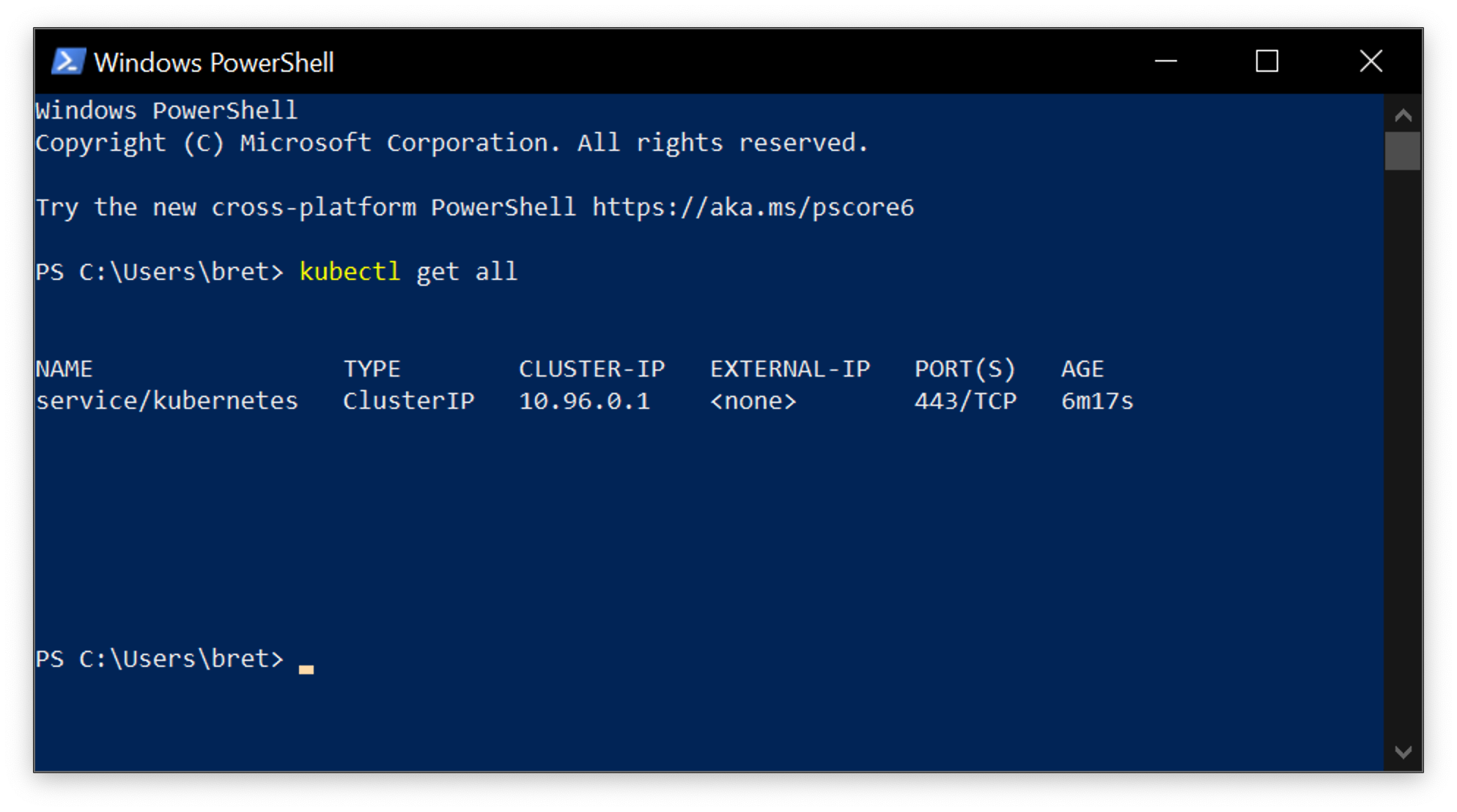

More get commands: Services

A service is a stable endpoint to connect to "something"

(In the initial proposal, they were called "portals")

- List the services on our cluster with one of these commands:kubectl get serviceskubectl get svc

More get commands: Services

A service is a stable endpoint to connect to "something"

(In the initial proposal, they were called "portals")

- List the services on our cluster with one of these commands:kubectl get serviceskubectl get svc

There is already one service on our cluster: the Kubernetes API itself.

More get commands: Listing running containers

Containers are manipulated through pods

A pod is a group of containers:

running together (on the same node)

sharing resources (RAM, CPU; but also network, volumes)

- List pods on our cluster:kubectl get pods

More get commands: Listing running containers

Containers are manipulated through pods

A pod is a group of containers:

running together (on the same node)

sharing resources (RAM, CPU; but also network, volumes)

- List pods on our cluster:kubectl get pods

Where are the pods that we saw just a moment earlier?!?

Namespaces

- Namespaces allow us to segregate resources

- List the namespaces on our cluster with one of these commands:kubectl get namespaceskubectl get namespacekubectl get ns

Namespaces

- Namespaces allow us to segregate resources

- List the namespaces on our cluster with one of these commands:kubectl get namespaceskubectl get namespacekubectl get ns

You know what ... This kube-system thing looks suspicious.

In fact, I'm pretty sure it showed up earlier, when we did:

kubectl describe node <node-name>

Accessing namespaces

By default,

kubectluses thedefaultnamespaceWe can see resources in all namespaces with

--all-namespaces

List the pods in all namespaces:

kubectl get pods --all-namespacesSince Kubernetes 1.14, we can also use

-Aas a shorter version:kubectl get pods -A

Here are our system pods!

What are all these control plane pods?

etcdis our etcd serverkube-apiserveris the API serverkube-controller-managerandkube-schedulerare other control plane componentscorednsprovides DNS-based service discovery (replacing kube-dns as of 1.11)kube-proxyis the (per-node) component managing port mappings and such<net name>is the optional (per-node) component managing the network overlaythe

READYcolumn indicates the number of containers in each podNote: this only shows containers, you won't see host svcs (e.g. microk8s)

Also Note: you may see different namespaces depending on setup

Scoping another namespace

- We can also look at a different namespace (other than

default)

- List only the pods in the

kube-systemnamespace:kubectl get pods --namespace=kube-systemkubectl get pods -n kube-system

Namespaces and other kubectl commands

We can use

-n/--namespacewith almost everykubectlcommandExample:

kubectl create --namespace=Xto create something in namespace X

We can use

-A/--all-namespaceswith most commands that manipulate multiple objectsExamples:

kubectl deletecan delete resources across multiple namespaceskubectl labelcan add/remove/update labels across multiple namespaces

What about kube-public?

- List the pods in the

kube-publicnamespace:kubectl -n kube-public get pods

Nothing!

kube-public is created by our installer & used for security bootstrapping.

Exploring kube-public

- The only interesting object in

kube-publicis a ConfigMap namedcluster-info

List ConfigMap objects:

kubectl -n kube-public get configmapsInspect

cluster-info:kubectl -n kube-public get configmap cluster-info -o yaml

Note the selfLink URI: /api/v1/namespaces/kube-public/configmaps/cluster-info

We can use that (later in kubectl context lectures)!

What about kube-node-lease?

Starting with Kubernetes 1.14, there is a

kube-node-leasenamespace(or in Kubernetes 1.13 if the NodeLease feature gate is enabled)

That namespace contains one Lease object per node

Node leases are a new way to implement node heartbeats

(i.e. node regularly pinging the control plane to say "I'm alive!")

For more details, see KEP-0009 or the node controller documentation k8s/kubectlget.md

Services

A service is a stable endpoint to connect to "something"

(In the initial proposal, they were called "portals")

- List the services on our cluster with one of these commands:kubectl get serviceskubectl get svc

Services

A service is a stable endpoint to connect to "something"

(In the initial proposal, they were called "portals")

- List the services on our cluster with one of these commands:kubectl get serviceskubectl get svc

There is already one service on our cluster: the Kubernetes API itself.

ClusterIP services

A

ClusterIPservice is internal, available from the cluster onlyThis is useful for introspection from within containers

Try to connect to the API:

curl -k https://10.96.0.1-kis used to skip certificate verificationMake sure to replace 10.96.0.1 with the CLUSTER-IP shown by

kubectl get svc

The command above should either time out, or show an authentication error. Why?

Time out

Connections to ClusterIP services only work from within the cluster

If we are outside the cluster, the

curlcommand will probably time out(Because the IP address, e.g. 10.96.0.1, isn't routed properly outside the cluster)

This is the case with most "real" Kubernetes clusters

To try the connection from within the cluster, we can use shpod

Authentication error

This is what we should see when connecting from within the cluster:

$ curl -k https://10.96.0.1{ "kind": "Status", "apiVersion": "v1", "metadata": { }, "status": "Failure", "message": "forbidden: User \"system:anonymous\" cannot get path \"/\"", "reason": "Forbidden", "details": { }, "code": 403}Explanations

We can see

kind,apiVersion,metadataThese are typical of a Kubernetes API reply

Because we are talking to the Kubernetes API

The Kubernetes API tells us "Forbidden"

(because it requires authentication)

The Kubernetes API is reachable from within the cluster

(many apps integrating with Kubernetes will use this)

DNS integration

Each service also gets a DNS record

The Kubernetes DNS resolver is available from within pods

(and sometimes, from within nodes, depending on configuration)

Code running in pods can connect to services using their name

(e.g. https://kubernetes/...)

Running our first containers on Kubernetes

(automatically generated title slide)

Running our first containers on Kubernetes

- First things first: we cannot run a container

Running our first containers on Kubernetes

First things first: we cannot run a container

We are going to run a pod, and in that pod there will be a single container

Running our first containers on Kubernetes

First things first: we cannot run a container

We are going to run a pod, and in that pod there will be a single container

In that container in the pod, we are going to run a simple

pingcommandThen we are going to start additional copies of the pod

Starting a simple pod with kubectl run

- We need to specify at least a name and the image we want to use

- Let's ping the address of

localhost, the loopback interface:kubectl run pingpong --image alpine ping 127.0.0.1

Starting a simple pod with kubectl run

- We need to specify at least a name and the image we want to use

- Let's ping the address of

localhost, the loopback interface:kubectl run pingpong --image alpine ping 127.0.0.1

(Starting with Kubernetes 1.12, we get a message telling us that

kubectl run is deprecated. Let's ignore it for now.)

Behind the scenes of kubectl run

- Let's look at the resources that were created by

kubectl run

- List most resource types:kubectl get all

Behind the scenes of kubectl run

- Let's look at the resources that were created by

kubectl run

- List most resource types:kubectl get all

We should see the following things:

deployment.apps/pingpong(the deployment that we just created)replicaset.apps/pingpong-xxxxxxxxxx(a replica set created by the deployment)pod/pingpong-xxxxxxxxxx-yyyyy(a pod created by the replica set)

Note: as of 1.10.1, resource types are displayed in more detail.

What are these different things?

A deployment is a high-level construct

allows scaling, rolling updates, rollbacks

multiple deployments can be used together to implement a canary deployment

delegates pods management to replica sets

A replica set is a low-level construct

makes sure that a given number of identical pods are running

allows scaling

rarely used directly

Note: A replication controller is the deprecated predecessor of a replica set

Our pingpong deployment

kubectl runcreated a deployment,deployment.apps/pingpong

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGEdeployment.apps/pingpong 1 1 1 1 10m- That deployment created a replica set,

replicaset.apps/pingpong-xxxxxxxxxx

NAME DESIRED CURRENT READY AGEreplicaset.apps/pingpong-7c8bbcd9bc 1 1 1 10m- That replica set created a pod,

pod/pingpong-xxxxxxxxxx-yyyyy

NAME READY STATUS RESTARTS AGEpod/pingpong-7c8bbcd9bc-6c9qz 1/1 Running 0 10mWe'll see later how these folks play together for:

- scaling, high availability, rolling updates

Viewing container output

Let's use the

kubectl logscommandWe will pass either a pod name, or a type/name

(E.g. if we specify a deployment or replica set, it will get the first pod in it)

Unless specified otherwise, it will only show logs of the first container in the pod

(Good thing there's only one in ours!)

- View the result of our

pingcommand:kubectl logs deploy/pingpong

Streaming logs in real time

Just like

docker logs,kubectl logssupports convenient options:-f/--followto stream logs in real time (à latail -f)--tailto indicate how many lines you want to see (from the end)--sinceto get logs only after a given timestamp

View the latest logs of our

pingcommand:kubectl logs deploy/pingpong --tail 1 --followLeave that command running, so that we can keep an eye on these logs

Scaling our application

- We can create additional copies of our container (I mean, our pod) with

kubectl scale

Scale our

pingpongdeployment:kubectl scale deploy/pingpong --replicas 3Note that this command does exactly the same thing:

kubectl scale deployment pingpong --replicas 3

Note: what if we tried to scale replicaset.apps/pingpong-xxxxxxxxxx?

We could! But the deployment would notice it right away, and scale back to the initial level.

Log streaming

Let's look again at the output of

kubectl logs(the one we started before scaling up)

kubectl logsshows us one line per secondWe could expect 3 lines per second

(since we should now have 3 pods running

ping)Let's try to figure out what's happening!

Streaming logs of multiple pods

- What happens if we restart

kubectl logs?

- Interrupt

kubectl logs(with Ctrl-C)

- Restart it:kubectl logs deploy/pingpong --tail 1 --follow

kubectl logs will warn us that multiple pods were found, and that it's showing us only one of them.

Let's leave kubectl logs running while we keep exploring.

Resilience

The deployment

pingpongwatches its replica setThe replica set ensures that the right number of pods are running

What happens if pods disappear?

- In a separate window, watch the list of pods:watch kubectl get pods

- Destroy the pod currently shown by

kubectl logs:kubectl delete pod pingpong-xxxxxxxxxx-yyyyy

What happened?

kubectl delete podterminates the pod gracefully(sending it the TERM signal and waiting for it to shutdown)

As soon as the pod is in "Terminating" state, the Replica Set replaces it

But we can still see the output of the "Terminating" pod in

kubectl logsUntil 30 seconds later, when the grace period expires

The pod is then killed, and

kubectl logsexits

What if we wanted something different?

What if we wanted to start a "one-shot" container that doesn't get restarted?

We could use

kubectl run --restart=OnFailureorkubectl run --restart=NeverThese commands would create jobs or pods instead of deployments

Under the hood,

kubectl runinvokes "generators" to create resource descriptionsWe could also write these resource descriptions ourselves (typically in YAML),

and create them on the cluster withkubectl apply -f(discussed later)With

kubectl run --schedule=..., we can also create cronjobs

Scheduling periodic background work

A Cron Job is a job that will be executed at specific intervals

(the name comes from the traditional cronjobs executed by the UNIX crond)

It requires a schedule, represented as five space-separated fields:

- minute [0,59]

- hour [0,23]

- day of the month [1,31]

- month of the year [1,12]

- day of the week ([0,6] with 0=Sunday)

*means "all valid values";/Nmeans "every N"Example:

*/3 * * * *means "every three minutes"

Creating a Cron Job

Let's create a simple job to be executed every three minutes

Cron Jobs need to terminate, otherwise they'd run forever

Create the Cron Job:

kubectl run every3mins --schedule="*/3 * * * *" --restart=OnFailure \--image=alpine sleep 10Check the resource that was created:

kubectl get cronjobs

Cron Jobs in action

At the specified schedule, the Cron Job will create a Job

The Job will create a Pod

The Job will make sure that the Pod completes

(re-creating another one if it fails, for instance if its node fails)

- Check the Jobs that are created:kubectl get jobs

(It will take a few minutes before the first job is scheduled.)

What about that deprecation warning?

As we can see from the previous slide,

kubectl runcan do many thingsThe exact type of resource created is not obvious

To make things more explicit, it is better to use

kubectl create:kubectl create deploymentto create a deploymentkubectl create jobto create a jobkubectl create cronjobto run a job periodically

(since Kubernetes 1.14)

Eventually,

kubectl runwill be used only to start one-shot pods

Various ways of creating resources

kubectl run- easy way to get started

- versatile

kubectl create <resource>- explicit, but lacks some features

- can't create a CronJob before Kubernetes 1.14

- can't pass command-line arguments to deployments

kubectl create -f foo.yamlorkubectl apply -f foo.yaml- all features are available

- requires writing YAML

Viewing logs of multiple pods

When we specify a deployment name, only one single pod's logs are shown

We can view the logs of multiple pods by specifying a selector

A selector is a logic expression using labels

Conveniently, when you

kubectl run somename, the associated objects have arun=somenamelabel

- View the last line of log from all pods with the

run=pingponglabel:kubectl logs -l run=pingpong --tail 1

Streaming logs of multiple pods

- Can we stream the logs of all our

pingpongpods?

- Combine

-land-fflags:kubectl logs -l run=pingpong --tail 1 -f

Note: combining -l and -f is only possible since Kubernetes 1.14!

Let's try to understand why ...

Streaming logs of many pods

- Let's see what happens if we try to stream the logs for more than 5 pods

Scale up our deployment:

kubectl scale deployment pingpong --replicas=8Stream the logs:

kubectl logs -l run=pingpong --tail 1 -f

We see a message like the following one:

error: you are attempting to follow 8 log streams,but maximum allowed concurency is 5,use --max-log-requests to increase the limitWhy can't we stream the logs of many pods?

kubectlopens one connection to the API server per podFor each pod, the API server opens one extra connection to the corresponding kubelet

If there are 1000 pods in our deployment, that's 1000 inbound + 1000 outbound connections on the API server

This could easily put a lot of stress on the API server

Prior Kubernetes 1.14, it was decided to not allow multiple connections

From Kubernetes 1.14, it is allowed, but limited to 5 connections

(this can be changed with

--max-log-requests)For more details about the rationale, see PR #67573

Shortcomings of kubectl logs

We don't see which pod sent which log line

If pods are restarted / replaced, the log stream stops

If new pods are added, we don't see their logs

To stream the logs of multiple pods, we need to write a selector

There are external tools to address these shortcomings

(e.g.: Stern)

kubectl logs -l ... --tail N

If we run this with Kubernetes 1.12, the last command shows multiple lines

This is a regression when

--tailis used together with-l/--selectorIt always shows the last 10 lines of output for each container

(instead of the number of lines specified on the command line)

The problem was fixed in Kubernetes 1.13

See #70554 for details.

Party tricks involving IP addresses

It is possible to specify an IP address with less than 4 bytes

(example:

127.1)Zeroes are then inserted in the middle

As a result,

127.1expands to127.0.0.1So we can

ping 127.1to pinglocalhost!

(See this blog post for more details.)

More party tricks with IP addresses

We can also ping

1.11.1will expand to1.0.0.1This is one of the addresses of Cloudflare's public DNS resolver

This is a quick way to check connectivity

(if we can reach 1.1, we probably have internet access)

Accessing logs from the CLI

(automatically generated title slide)

Accessing logs from the CLI

The

kubectl logscommand has limitations:it cannot stream logs from multiple pods at a time

when showing logs from multiple pods, it mixes them all together

We are going to see how to do it better

Doing it manually

We could (if we were so inclined) write a program or script that would:

take a selector as an argument

enumerate all pods matching that selector (with

kubectl get -l ...)fork one

kubectl logs --follow ...command per containerannotate the logs (the output of each

kubectl logs ...process) with their originpreserve ordering by using

kubectl logs --timestamps ...and merge the output

Doing it manually

We could (if we were so inclined) write a program or script that would:

take a selector as an argument

enumerate all pods matching that selector (with

kubectl get -l ...)fork one

kubectl logs --follow ...command per containerannotate the logs (the output of each

kubectl logs ...process) with their originpreserve ordering by using

kubectl logs --timestamps ...and merge the output

We could do it, but thankfully, others did it for us already!

Stern

Stern is an open source project originally by Wercker.

From the README:

Stern allows you to tail multiple pods on Kubernetes and multiple containers within the pod. Each result is color coded for quicker debugging.

The query is a regular expression so the pod name can easily be filtered and you don't need to specify the exact id (for instance omitting the deployment id). If a pod is deleted it gets removed from tail and if a new pod is added it automatically gets tailed.

Exactly what we need!

Checking if Stern is installed

Run

stern(without arguments) to check if it's installed:$ sternTail multiple pods and containers from KubernetesUsage:stern pod-query [flags]If it's missing, let's see how to install it

Installing Stern

Stern is written in Go

Go programs are usually very easy to install

(no dependencies, extra libraries to install, etc)

Binary releases are available on GitHub

Stern is also available through most package managers

(e.g. on macOS, we can

brew install sternorsudo port install stern) k8s/logs-cli.md

Using Stern

There are two ways to specify the pods whose logs we want to see:

-lfollowed by a selector expression (like with manykubectlcommands)with a "pod query," i.e. a regex used to match pod names

These two ways can be combined if necessary

- View the logs for all the pingpong containers:stern pingpong

Stern convenient options

The

--tail Nflag shows the lastNlines for each container(Instead of showing the logs since the creation of the container)

The

-t/--timestampsflag shows timestampsThe

--all-namespacesflag is self-explanatory

- View what's up with the

weavesystem containers:stern --tail 1 --timestamps --all-namespaces weave

Using Stern with a selector

When specifying a selector, we can omit the value for a label

This will match all objects having that label (regardless of the value)

Everything created with

kubectl runhas a labelrunEverything created with

kubectl create deploymenthas a labelappWe can use that property to view the logs of all the pods created with

kubectl create deployment

- View the logs for all the things started with

kubectl create deployment:stern -l app

:EN:- Viewing pod logs from the CLI :FR:- Consulter les logs des pods depuis la CLI k8s/logs-cli.md

Cleanup

Let's cleanup before we start the next lecture!

- remove our deployment and cronjob:kubectl delete deployment/pingpong cronjob/sleep

k8smastery/cleanup-pingpong-sleep.md

Assignment 1: first steps

(automatically generated title slide)

Assignment 1: first steps

Answer these questions with the kubectl command you'd use to get the answer:

Cluster inventory

1.1. How many nodes does your cluster have?

1.2. What kernel version and what container engine is each node running?

(answers on next slide)

Answers

1.1. We can get a list of nodes with kubectl get nodes.

1.2. kubectl get nodes -o wide will list extra information for each node.

This will include kernel version and container engine.

Assignment 1: first steps

Control plane examination

2.1. List only the pods in the kube-system namespace.

2.2. Explain the role of some of these pods.

2.3. If there are few or no pods in kube-system, why could that be?

(answers on next slide)

Answers

2.1. kubectl get pods --namespace=kube-system

2.2. This depends on how our cluster was set up.

On some clusters, we might see pods named etcd-XXX, kube-apiserver-XXX: these correspond to control plane components.

It's also common to see kubedns-XXX or coredns-XXX: these implement the DNS service that lets us resolve service names into their ClusterIP address.

2.3. On some clusters, the control plane is located outside the cluster itself.

In that case, the control plane won't show up in kube-system, but you can find on host with ps aux | grep kube.

Assignment 1: first steps

Running containers

3.1. Create a deployment using kubectl create that runs the image bretfisher/clock and name it ticktock.

3.2. Start 2 more containers of that image in the ticktock deployment.

3.3. Use a selector to output only the last line of logs of each container.

(answers on next slide)

Answers

3.1. kubectl create deployment ticktock --image=bretfisher/clock

By default, it will have one replica, translating to one container.

3.2. kubectl scale deployment ticktock --replicas=3

This will scale the deployment to three replicas (two more containers).

3.3. kubectl logs --selector=app=ticktock --tail=1

All the resources created with kubectl create deployment xxx will have the label app=xxx.

If you needed to use a pod selector, you can see them in the resource that created them.

In this case that's the ReplicaSet, so kubectl describe replicaset ticktock-xxxxx would help.

Therefore, we use the selector app=ticktock here to match all the pods belonging to this deployment.

19,000 words

They say, "a picture is worth one thousand words."

The following 19 slides show what really happens when we run:

kubectl run web --image=nginx --replicas=3

Exposing containers

(automatically generated title slide)

Exposing containers

We can connect to our pods using their IP address

Then we need to figure out a lot of things:

how do we look up the IP address of the pod(s)?

how do we connect from outside the cluster?

how do we load balance traffic?

what if a pod fails?

Kubernetes has a resource type named Service

Services address all these questions!

Services in a nutshell

Services give us a stable endpoint to connect to a pod or a group of pods

An easy way to create a service is to use

kubectl exposeIf we have a deployment named

my-little-deploy, we can run:kubectl expose deployment my-little-deploy --port=80... and this will create a service with the same name (

my-little-deploy)Services are automatically added to an internal DNS zone

(in the example above, our code can now connect to http://my-little-deploy/)

Advantages of services

We don't need to look up the IP address of the pod(s)

(we resolve the IP address of the service using DNS)

There are multiple service types; some of them allow external traffic

(e.g.

LoadBalancerandNodePort)Services provide load balancing

(for both internal and external traffic)

Service addresses are independent from pods' addresses

(when a pod fails, the service seamlessly sends traffic to its replacement)

Many kinds and flavors of service

There are different types of services:

ClusterIP,NodePort,LoadBalancer,ExternalNameThere are also headless services

Services can also have optional external IPs

There is also another resource type called Ingress

(specifically for HTTP services)

Wow, that's a lot! Let's start with the basics ...

ClusterIP

It's the default service type

A virtual IP address is allocated for the service

(in an internal, private range; e.g. 10.96.0.0/12)

This IP address is reachable only from within the cluster (nodes and pods)

Our code can connect to the service using the original port number

Perfect for internal communication, within the cluster

LoadBalancer

An external load balancer is allocated for the service

(typically a cloud load balancer, e.g. ELB on AWS, GLB on GCE ...)

This is available only when the underlying infrastructure provides some kind of "load balancer as a service"

Each service of that type will typically cost a little bit of money

(e.g. a few cents per hour on AWS or GCE)

Ideally, traffic would flow directly from the load balancer to the pods

In practice, it will often flow through a

NodePortfirst

NodePort

A port number is allocated for the service

(by default, in the 30000-32767 range)

That port is made available on all our nodes and anybody can connect to it

(we can connect to any node on that port to reach the service)

Our code needs to be changed to connect to that new port number

Under the hood:

kube-proxysets up a bunch ofiptablesrules on our nodesSometimes, it's the only available option for external traffic

(e.g. most clusters deployed with kubeadm or on-premises)

Running containers with open ports

Since

pingdoesn't have anything to connect to, we'll have to run something elseWe could use the

nginxofficial image, but ...... we wouldn't be able to tell the backends from each other!

We are going to use

bretfisher/httpenv, a tiny HTTP server written in Gobretfisher/httpenvlistens on port 8888It serves its environment variables in JSON format

The environment variables will include

HOSTNAME, which will be the pod name(and therefore, will be different on each backend)

Creating a deployment for our HTTP server

We could do

kubectl run httpenv --image=bretfisher/httpenv...But since

kubectl runis changing, let's see how to usekubectl createinstead

- In another window, watch the pods (to see when they are created):kubectl get pods -w

Create a deployment for this very lightweight HTTP server:

kubectl create deployment httpenv --image=bretfisher/httpenvScale it to 10 replicas:

kubectl scale deployment httpenv --replicas=10

Exposing our deployment

- We'll create a default

ClusterIPservice

Expose the HTTP port of our server:

kubectl expose deployment httpenv --port 8888Look up which IP address was allocated:

kubectl get service

Services are layer 4 constructs

You can assign IP addresses to services, but they are still layer 4

(i.e. a service is not an IP address; it's an IP address + protocol + port)

This is caused by the current implementation of

kube-proxy(it relies on mechanisms that don't support layer 3)

As a result: you have to indicate the port number for your service

(with some exceptions, like

ExternalNameor headless services, covered later)

Testing our service

- We will now send a few HTTP requests to our pods

Run

shpodif not on Linux host so we can access internal ClusterIPkubectl attach --namespace=shpod -ti shpodLet's obtain the IP address that was allocated for our service, programmatically:

IP=$(kubectl get svc httpenv -o go-template --template '{{ .spec.clusterIP }}')

Send a few requests:

curl http://$IP:8888/Too much output? Filter it with

jq:curl -s http://$IP:8888/ | jq .HOSTNAME

ExternalName

Services of type

ExternalNameare quite differentNo load balancer (internal or external) is created

Only a DNS entry gets added to the DNS managed by Kubernetes

That DNS entry will just be a

CNAMEto a provided record

Example:

kubectl create service externalname k8s --external-name kubernetes.ioCreates a CNAME k8s pointing to kubernetes.io

External IPs

We can add an External IP to a service, e.g.:

kubectl expose deploy my-little-deploy --port=80 --external-ip=1.2.3.41.2.3.4should be the address of one of our nodes(it could also be a virtual address, service address, or VIP, shared by multiple nodes)

Connections to

1.2.3.4:80will be sent to our serviceExternal IPs will also show up on services of type

LoadBalancer(they will be added automatically by the process provisioning the load balancer)

Headless services

Sometimes, we want to access our scaled services directly:

if we want to save a tiny little bit of latency (typically less than 1ms)

if we need to connect over arbitrary ports (instead of a few fixed ones)

if we need to communicate over another protocol than UDP or TCP

if we want to decide how to balance the requests client-side

...

In that case, we can use a "headless service"

Creating a headless services

A headless service is obtained by setting the

clusterIPfield toNone(Either with

--cluster-ip=None, or by providing a custom YAML)As a result, the service doesn't have a virtual IP address

Since there is no virtual IP address, there is no load balancer either

CoreDNS will return the pods' IP addresses as multiple

ArecordsThis gives us an easy way to discover all the replicas for a deployment

Services and endpoints

A service has a number of "endpoints"

Each endpoint is a host + port where the service is available

The endpoints are maintained and updated automatically by Kubernetes

- Check the endpoints that Kubernetes has associated with our

httpenvservice:kubectl describe service httpenv

In the output, there will be a line starting with Endpoints:.

That line will list a bunch of addresses in host:port format.

Viewing endpoint details

When we have many endpoints, our display commands truncate the list

kubectl get endpointsIf we want to see the full list, we can use a different output:

kubectl get endpoints httpenv -o yamlThese IP addresses should match the addresses of the corresponding pods:

kubectl get pods -l app=httpenv -o wide

endpoints not endpoint

endpointsis the only resource that cannot be singular

$ kubectl get endpointerror: the server doesn't have a resource type "endpoint"This is because the type itself is plural (unlike every other resource)

There is no

endpointobject:type Endpoints structThe type doesn't represent a single endpoint, but a list of endpoints

The DNS zone

In the

kube-systemnamespace, there should be a service namedkube-dnsThis is the internal DNS server that can resolve service names

The default domain name for the service we created is

default.svc.cluster.local

Get the IP address of the internal DNS server:

IP=$(kubectl -n kube-system get svc kube-dns -o jsonpath={.spec.clusterIP})Resolve the cluster IP for the

httpenvservice:host httpenv.default.svc.cluster.local $IP

Ingress

Ingresses are another type (kind) of resource

They are specifically for HTTP services

(not TCP or UDP)

They can also handle TLS certificates, URL rewriting ...

They require an Ingress Controller to function

Cleanup

Let's cleanup before we start the next lecture!

- remove our httpenv resources:kubectl delete deployment/httpenv service/httpenv

Kubernetes network model

(automatically generated title slide)

Kubernetes network model

TL,DR:

Our cluster (nodes and pods) is one big flat IP network.

Kubernetes network model

TL,DR:

Our cluster (nodes and pods) is one big flat IP network.

In detail:

all nodes must be able to reach each other, without NAT

all pods must be able to reach each other, without NAT

pods and nodes must be able to reach each other, without NAT

each pod is aware of its IP address (no NAT)

pod IP addresses are assigned by the network implementation

Kubernetes doesn't mandate any particular implementation

Kubernetes network model: the good

Everything can reach everything

No address translation

No port translation

No new protocol

The network implementation can decide how to allocate addresses

IP addresses don't have to be "portable" from a node to another

(For example, We can use a subnet per node and use a simple routed topology)

The specification is simple enough to allow many various implementations

Kubernetes network model: the less good

Everything can reach everything

if you want security, you need to add network policies

the network implementation you use needs to support them

There are literally dozens of implementations out there

(15 are listed in the Kubernetes documentation)

Pods have level 3 (IP) connectivity, but services are level 4 (TCP or UDP)

(Services map to a single UDP or TCP port; no port ranges or arbitrary IP packets)

kube-proxyis on the data path when connecting to a pod or container,

and it's not particularly fast (relies on userland proxying or iptables)

Kubernetes network model: in practice

The nodes we are using have been set up to use kubenet, Calico, or something else

Don't worry about the warning about

kube-proxyperformanceUnless you:

- routinely saturate 10G network interfaces

- count packet rates in millions per second

- run high-traffic VOIP or gaming platforms

- do weird things that involve millions of simultaneous connections

(in which case you're already familiar with kernel tuning)

If necessary, there are alternatives to

kube-proxy; e.g.kube-router

The Container Network Interface (CNI)

Most Kubernetes clusters use CNI "plugins" to implement networking

When a pod is created, Kubernetes delegates the network setup to these plugins

(it can be a single plugin, or a combination of plugins, each doing one task)

Typically, CNI plugins will:

allocate an IP address (by calling an IPAM plugin)

add a network interface into the pod's network namespace

configure the interface as well as required routes, etc.

Multiple moving parts

The "pod-to-pod network" or "pod network":

provides communication between pods and nodes

is generally implemented with CNI plugins

The "pod-to-service network":

provides internal communication and load balancing

is generally implemented with kube-proxy (or maybe kube-router)

Network policies:

provide firewalling and isolation

can be bundled with the "pod network" or provided by another component

Even more moving parts

Inbound traffic can be handled by multiple components:

something like kube-proxy or kube-router (for NodePort services)

load balancers (ideally, connected to the pod network)

It is possible to use multiple pod networks in parallel

(with "meta-plugins" like CNI-Genie or Multus)

Some solutions can fill multiple roles

(e.g. kube-router can be set up to provide the pod network and/or network policies and/or replace kube-proxy)

Assignment 2: more about deployments

(automatically generated title slide)

Assignment 2: more about deployments

Create a deployment called

littletomcatusing thetomcatimage.What command will help you get the IP address of that Tomcat server?

What steps would you take to ping it from another container?

(Use the

shpodenvironment if necessary.)What command would delete the running pod inside that deployment?

What happens if we delete the pod that holds Tomcat, while the ping is running?

(answers on next two slides)

assignments/02kubectlexpose.md

Answers

kubectl create deployment littletomcat --image=tomcatList all pods with label

app=littletomcat, with extra details including IP address:kubectl get pods --selector=app=littletomcat -o wide. You could also describe the pod:kubectl describe pod littletomcat-XXX-XXXStart a shell inside the cluster: One way to start a shell inside the cluster:

kubectl apply -f https://k8smastery.com/shpod.yamlthenkubectl attach --namespace=shpod -ti shpod

A easier way is to use a special domain we created

curl https://shpod.sh | shThen the IP address of the pod should ping correctly. You could also start a deployment or pod temporarily (like nginx), then exec in, install ping, and ping the IP.

assignments/02kubectlexpose.md

Answers

We can delete the pod with:

kubectl delete pods --selector=app=littletomcator copy/paste the exact pod name and delete it.If we delete the pod, the following things will happen:

the pod will be gracefully terminated,

the ping command that we left running will fail,

the replica set will notice that it doens't have the right count of pods and create a replacement pod,

that new pod will have a different IP address (so the

pingcommand won't recover).

assignments/02kubectlexpose.md

Assignment 2: first service

What command can give our Tomcat server a stable DNS name and IP address?

(An address that doesn't change when something bad happens to the container.)

What commands would you run to curl Tomcat with that DNS address?

(Use the

shpodenvironment if necessary.)If we delete the pod that holds Tomcat, does the IP address still work?

(answers on next slide)

assignments/02kubectlexpose.md

Answers

We need to create a Service for our deployment, which will have a ClusterIP that is usable from within the cluster. One way is with

kubectl expose deployment littletomcat --port=8080(The Tomcat image is listening on port 8080 according to Docker Hub). Another way is withkubectl create service clusterip littletomcat --tcp 8080In the

shpodenvironment that we started earlier:# Install curlapk add curl# Make a request to the littletomcat service (in a different namespace)curl http://littletomcat.default:8080Note that shpod runs in the shpod namespace, so to find a DNS name of a different namespace in the same cluster, you should use

<hostname>.<namespace>syntax. That was a little advanced, so A+ if you got it on the first try!Yes. If we delete the pod, another will be created to replace it. The ClusterIP will still work.

(Except during a short period while the replacement container is being started.)

assignments/02kubectlexpose.md

Our sample application

(automatically generated title slide)

What's this application?

What's this application?

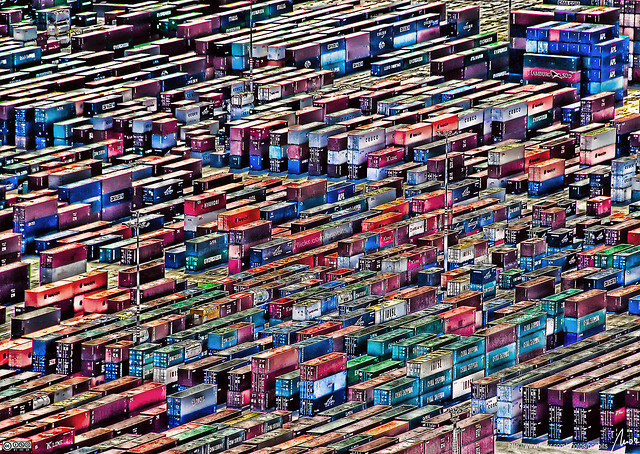

- It is a DockerCoin miner! 💰🐳📦🚢

What's this application?

It is a DockerCoin miner! 💰🐳📦🚢

No, you can't buy coffee with DockerCoins

What's this application?

It is a DockerCoin miner! 💰🐳📦🚢

No, you can't buy coffee with DockerCoins

How DockerCoins works:

generate a few random bytes

hash these bytes

increment a counter (to keep track of speed)

repeat forever!

What's this application?

It is a DockerCoin miner! 💰🐳📦🚢

No, you can't buy coffee with DockerCoins

How DockerCoins works:

generate a few random bytes

hash these bytes

increment a counter (to keep track of speed)

repeat forever!

DockerCoins is not a cryptocurrency

(the only common points are "randomness," "hashing," and "coins" in the name)

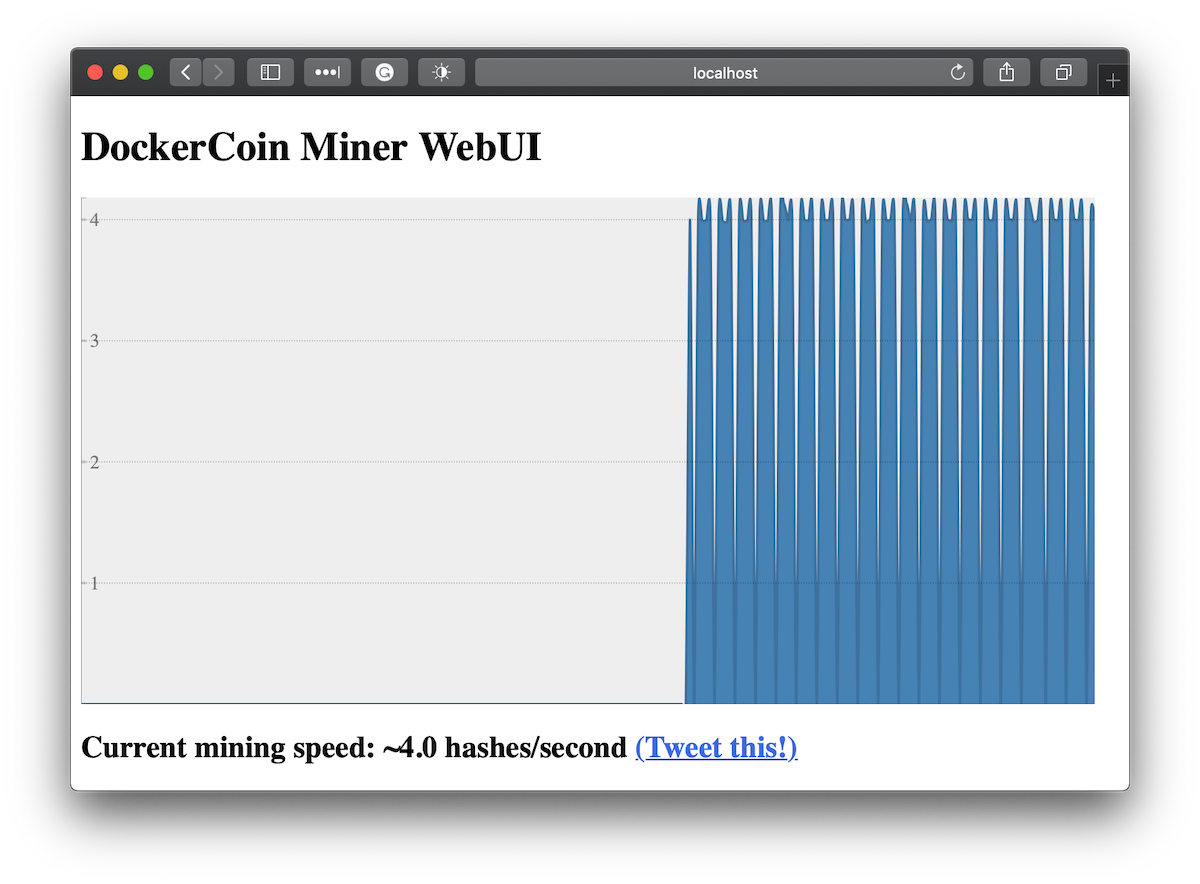

DockerCoins in the microservices era

DockerCoins is made of 5 services:

rng= web service generating random byteshasher= web service computing hash of POSTed dataworker= background process callingrngandhasherwebui= web interface to watch progressredis= data store (holds a counter updated byworker)

These 5 services are visible in the application's Compose file, dockercoins-compose.yml

How DockerCoins works

workerinvokes web servicerngto generate random bytesworkerinvokes web servicehasherto hash these bytesworkerdoes this in an infinite loopEvery second,

workerupdatesredisto indicate how many loops were donewebuiqueriesredis, and computes and exposes "hashing speed" in our browser

(See diagram on next slide!)

Service discovery in container-land

How does each service find out the address of the other ones?

Service discovery in container-land

How does each service find out the address of the other ones?

We do not hard-code IP addresses in the code

We do not hard-code FQDNs in the code, either

We just connect to a service name, and container-magic does the rest

(And by container-magic, we mean "a crafty, dynamic, embedded DNS server")

Example in worker/worker.py